Facebook said on Wednesday it would take “stronger” action against people who repeatedly share misinformation on the platform.

Users are already notified when they share content that a fact-checker has rated, but Facebook says it has redesigned and simplified these notifications to make them easier to understand.

“We are launching new ways to inform people if they’re interacting with content that’s been rated by a fact-checker as well as taking stronger action against people who repeatedly share misinformation on Facebook. Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections, or other topics, we’re making sure fewer people see misinformation on our apps,” the social media giant said in a blog post.

Facebook will also reduce the distribution in the News Feed of posts from individual users who have repeatedly shared content rated false by the company’s fact-checking partners.

Taking Action Against People Who Repeatedly Share Misinformation https://t.co/GagjE0v7IA (Facebook Newsroom, @fbnewsroom, May 26, 2021)

“We will reduce the distribution of all posts in News Feed from an individual’s Facebook account if they repeatedly share content that has been rated by one of our fact-checking partners. We already reduce a single post’s reach in News Feed if it has been debunked,” Facebook noted.

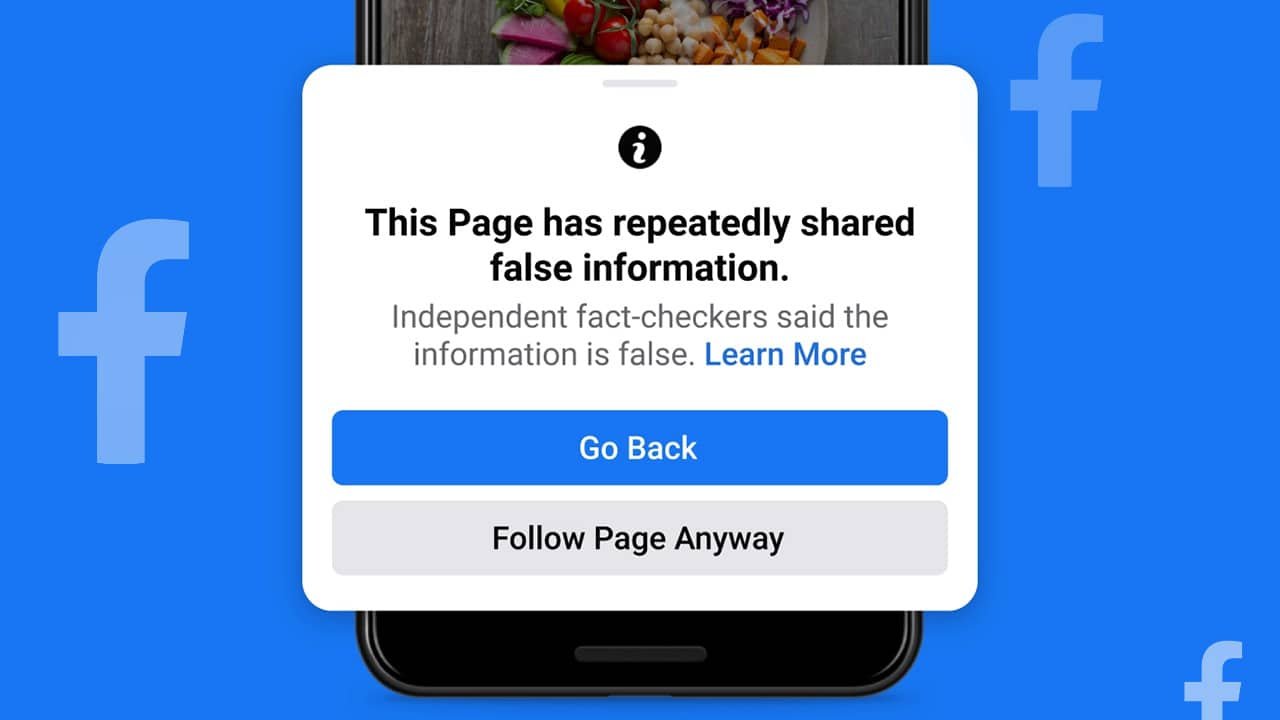

In addition, Facebook is launching a new tool that will let users know if they are interacting with content that has been rated by a fact-checker. “We want to give people more information before they like a Page that has repeatedly shared content that fact-checkers have rated, so you’ll see a pop up if you go to like one of these Pages. You can also click to learn more, including that fact-checkers said some posts shared by this Page include false information and a link to more information about our fact-checking program,” the firm added.